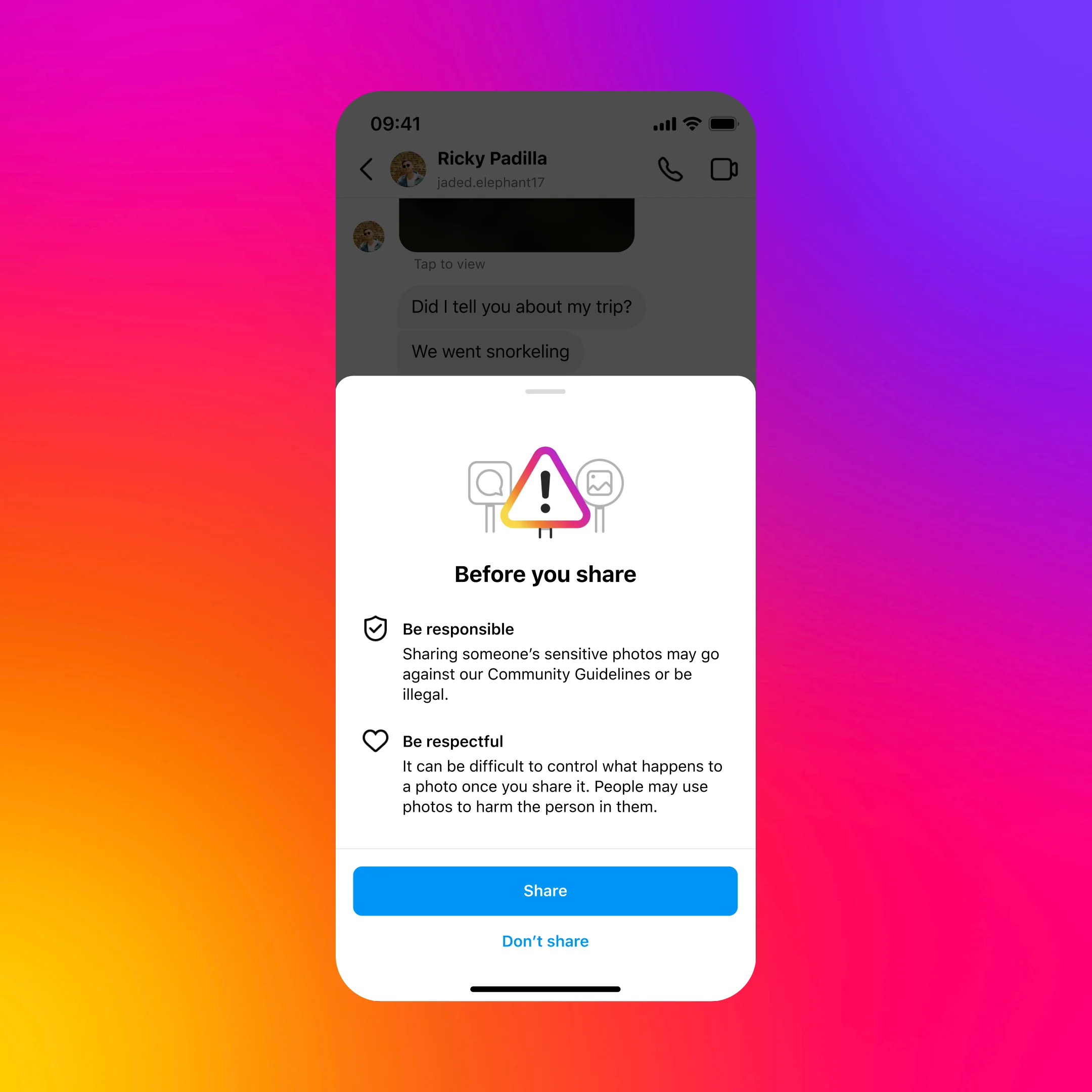

Instagram is rolling out a new tool that, according to its parent company Meta, is designed to protect users from sextortion and the misuse of nude photos. The AI-based mechanism applies to DMs, i.e. direct messages between users. The tool scans private conversations for possible nude photos. If it detects one, the sender first sees a warning asking whether they really want to send the photo. If the photo is sent anyway, Instagram automatically blurs it. The recipient then sees a warning asking whether they really want to view the photo. Both sender and recipient are also shown expert tips explaining the potential risks.

The automatic scanning of private messages will be enabled by default for users under 18. Adult Instagram users will be notified of the option and can switch it on or off. According to Instagram, the analysis runs not on its servers but on the user's own device, usually their phone. This means detection also works in end-to-end encrypted chats. Instagram says it has no access to the images until a user reports them.

Instagram will also introduce new pop-up messages for users who have interacted with accounts that have since been blocked for sextortion. These pop-ups will link to further help resources.